Recent Research

A Robot System for Indoor Environment Question Answering with Cognitive Map Leveraging Vision-language Models

With an aging population, the demand for elder care is rising rapidly, making robots in human society more urgently needed than before. Embodied question answering (EQA) tasks have consequently attracted growing attention in recent years. In this study, we propose a hierarchical architecture that effectively uses and enhances the capabilities of existing vision-language models to build a question-answering system for real-world indoor environments, and that further constructs the environmental memory existing EQA systems lack. The robot system has four main capabilities: 1) understanding the objects in the environment and their states through a pre-trained vision backbone, 2) understanding human natural-language questions and establishing a bidirectional correlation between vision and language, 3) autonomously exploring the environment and constructing cognitive maps, and 4) updating and using the cognitive map to assist navigation and answer questions. In addition, we propose a zero-shot method that gives pre-trained vision backbone models visual place recognition capability, ensuring that the robot can locate itself on the cognitive map and update the map correctly.
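The abstract does not spell out how the zero-shot place recognition works. A common way to reuse a frozen vision backbone for this is to compare a global descriptor of the current view with descriptors stored at cognitive-map nodes using cosine similarity. The sketch below assumes the descriptors are already extracted (plain lists stand in for backbone embeddings); `recognize_place` and its `threshold` value are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def recognize_place(
    query: List[float],
    node_descriptors: Dict[str, List[float]],
    threshold: float = 0.8,
) -> Tuple[Optional[str], float]:
    """Return the best-matching map node, or None if no node is similar enough.

    `query` stands in for a global descriptor from a frozen vision backbone;
    `threshold` is a hypothetical acceptance value, not a published one.
    """
    best_node, best_score = None, -1.0
    for node_id, descriptor in node_descriptors.items():
        score = cosine_similarity(query, descriptor)
        if score > best_score:
            best_node, best_score = node_id, score
    if best_score < threshold:
        # No confident match: the view may belong to a new place on the map.
        return None, best_score
    return best_node, best_score
```

A rejected match (returning `None`) is where such a system could add a new node to the cognitive map instead of updating an existing one.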

Spatial Graph-based Localization and Navigation on Scaleless Floorplan

Effective navigation in unfamiliar environments is a critical challenge for the successful deployment of service robots. Current navigation methods, which rely on autonomous or teleoperated exploration and map building, pose technical difficulties for end-users. In contrast, humans can navigate effectively using abstract floorplans, suggesting that service robots could leverage similar techniques. The practical application of floorplan-based navigation, however, is currently limited by methods that require exact measurements or scale and significant pre-exploration. This thesis addresses these challenges and investigates the feasibility of floorplan-based navigation for service robots in unexplored environments. Specifically, we propose a novel scale-invariant floorplan localization method that enables navigation without precise scale information. Furthermore, we introduce an incremental graph augmentation approach that enriches the floorplan representation with traversability and semantic information derived from robot observations. Finally, we develop an efficient navigation framework that uses both the inherent structure of the floorplan and real-time observations. This research contributes to the deployment of service robots in unexplored environments, particularly where extensive exploration and map building are impractical or technically challenging for end-users.
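To illustrate why a floorplan without scale can still be useful, here is a minimal sketch (not the thesis's method) that estimates the unknown scale factor by least squares between distances read off the floorplan in arbitrary units and distances the robot measures in metres. Setting the derivative of the sum of (s·p − o)² to zero gives s = Σ p·o / Σ p². The function names are hypothetical.

```python
from typing import List


def estimate_scale(plan_dists: List[float], observed_dists: List[float]) -> float:
    """Least-squares scale s minimizing sum((s * plan - observed)^2).

    plan_dists are in arbitrary floorplan units, observed_dists in metres.
    """
    if len(plan_dists) != len(observed_dists) or not plan_dists:
        raise ValueError("need two non-empty lists of equal length")
    numerator = sum(p * o for p, o in zip(plan_dists, observed_dists))
    denominator = sum(p * p for p in plan_dists)
    if denominator == 0.0:
        raise ValueError("floorplan distances must not all be zero")
    return numerator / denominator


def scale_residual(plan_dists: List[float], observed_dists: List[float]) -> float:
    """RMS error after fitting the scale; small values mean a hypothesis fits."""
    s = estimate_scale(plan_dists, observed_dists)
    squared = [(s * p - o) ** 2 for p, o in zip(plan_dists, observed_dists)]
    return (sum(squared) / len(squared)) ** 0.5
```

Comparing `scale_residual` across candidate pose hypotheses would let a robot rank them without ever being told the floorplan's true scale.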

Object-Goal Navigation of Home Care Robot based on Human Activity Inference and Cognitive Memory

As older adults' memory and cognitive ability deteriorate, a cognitive robot system that finds desired objects for its users becomes more important. Cognitive abilities, such as detecting and memorizing the environment and human activities, are crucial for effective human-robot interaction and navigation. Robots must also possess language understanding capabilities to comprehend human speech and respond promptly. This research develops a mobile robot system for home care that incorporates human activity inference and cognitive memory to reason about the target object's location and navigate to find it. The method comprises three modules: 1) an object-goal navigation module for mapping the environment, detecting surrounding objects, and navigating to find the target object, 2) a cognitive memory module for recognizing human activity and storing encoded information, and 3) an interaction module to interact with humans and infer the target object’s position. By leveraging big data, human cues, and a commonsense knowledge graph, the system can search for target objects efficiently and robustly. The effectiveness of the system is validated in both simulated and real-world scenarios.

Household Robot Utilizing Location Information for Human Activity and Habit Understanding

This research paper introduces an integrated system that combines location estimation, human activity recognition (HAR), and plan recognition. To enhance HAR performance, we propose a location estimation system that fuses a scene recognition model with a custom-designed estimator based on distance metrics between humans and objects. By integrating the location information from this system with human skeleton data, we propose an AL-GCN model for HAR that exploits the spatial context provided by the location information to improve recognition accuracy. To analyze the activities further, we propose a plan recognition system that updates the knowledge base and considers human habits to make three predictions: the next activity, the objective, and the plan. We evaluated the system on both datasets and real-world scenarios. In the dataset evaluation, our location estimation system achieves the best performance, at 92.83%.
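The abstract fuses a scene-recognition model with a distance-based estimator but does not give the fusion rule. The sketch below is one plausible stand-in: inverse human-to-object distances are turned into a normalized distribution over locations and then linearly blended with the scene classifier's probabilities. The weight `alpha`, the object-to-location table, and both function names are hypothetical.

```python
from typing import Dict


def distance_scores(object_dists: Dict[str, float],
                    object_location: Dict[str, str]) -> Dict[str, float]:
    """Score each location by inverse distance from the person to its objects."""
    scores: Dict[str, float] = {}
    for obj, dist in object_dists.items():
        loc = object_location[obj]
        scores[loc] = scores.get(loc, 0.0) + 1.0 / max(dist, 1e-6)
    total = sum(scores.values())
    # Normalize so the scores form a probability distribution.
    return {loc: s / total for loc, s in scores.items()} if total > 0 else scores


def fuse_location(scene_probs: Dict[str, float],
                  dist_probs: Dict[str, float],
                  alpha: float = 0.5) -> str:
    """Weighted linear fusion of two location distributions (alpha is hypothetical)."""
    locations = set(scene_probs) | set(dist_probs)
    fused = {loc: alpha * scene_probs.get(loc, 0.0)
             + (1.0 - alpha) * dist_probs.get(loc, 0.0)
             for loc in locations}
    return max(fused, key=fused.get)
```

For example, a person 0.5 m from the sofa and 3 m from the stove pulls the estimate toward the living room even if the scene classifier slightly prefers the kitchen.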

User Intent-driven Navigation of Home Service Robot based on Semantic Scene Cognition

For mobile robots to adapt to different environments and provide various services to human users, environmental cognition, reasoning and decision-making ability, and navigation capability are particularly important. In addition, a service-oriented robot should understand what a user intends from his/her utterances so that it can respond appropriately during human-robot interaction. In this work, we propose a user intent-driven navigation framework that (1) first builds a cognitive map of an indoor environment after autonomously exploring it with a LiDAR and an RGB-D camera, using a SLAM algorithm and the proposed scene recognition network, and (2) then uses a natural language dataset to construct a semantic knowledge graph that associates various object identities with their major functions in different scenes. Based on this framework, the robot can infer the user's intent, determine the target scene and the specific location to head to, and then search the topological nodes of the cognitive map with the A* algorithm to find a natural path to the goal location, so that the user's intended service can be delivered, e.g., leading a person to the bed in a bedroom to rest. To validate the effectiveness of the proposed framework, several experiments were conducted on a home-made mobile robot, with highly promising results.
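The abstract names A* as the search over the cognitive map's topological nodes. Below is a minimal sketch of A* on such a graph, assuming each node has a 2D position and edge cost is Euclidean distance (which also makes straight-line distance a consistent heuristic). The node names and coordinates in any usage are hypothetical.

```python
import heapq
import math
from typing import Dict, List, Optional, Set, Tuple

Point = Tuple[float, float]


def a_star(nodes: Dict[str, Point],
           edges: Dict[str, List[str]],
           start: str, goal: str) -> Optional[List[str]]:
    """A* over a topological graph with Euclidean edge costs and heuristic."""
    def dist(a: str, b: str) -> float:
        (x1, y1), (x2, y2) = nodes[a], nodes[b]
        return math.hypot(x2 - x1, y2 - y1)

    # Heap entries are (f = g + h, g, node).
    open_heap = [(dist(start, goal), 0.0, start)]
    came_from: Dict[str, str] = {}
    g_cost = {start: 0.0}
    closed: Set[str] = set()
    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if current == goal:
            # Walk parent links back to the start, then reverse.
            path = [current]
            while current in came_from:
                current = came_from[current]
                path.append(current)
            return path[::-1]
        if current in closed:
            continue  # stale heap entry
        closed.add(current)
        for nbr in edges.get(current, []):
            new_g = g + dist(current, nbr)
            if new_g < g_cost.get(nbr, math.inf):
                g_cost[nbr] = new_g
                came_from[nbr] = current
                heapq.heappush(open_heap, (new_g + dist(nbr, goal), new_g, nbr))
    return None  # goal unreachable
```

In a full system, the goal node would come from the knowledge-graph inference (e.g., the node nearest the bed in the bedroom).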

Vision-based Gait Analysis Robot for Fall Prediction

Elderly healthcare is a growing concern in our aging society, particularly as more elderly people live alone. Accidents in this group are increasing, and falls are the leading cause of injury and, tragically, even death. A fall prediction model has therefore emerged as a crucial solution: it assesses the user's gait characteristics well before a potential fall, leaving ample time to alert the elderly person and take preventive measures. Our approach is a mobile robot companion that accompanies the user in their home. The robot uses an RGB-D camera to capture and analyze the subject's gait parameters and the one-class SVM (OC-SVM) algorithm to categorize them into high-risk and low-risk groups. Building on this foundation, we introduce a fall risk prediction system centered on depth camera technology, intended to provide continuous gait health monitoring for elderly individuals at home.
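The abstract does not list which gait parameters feed the OC-SVM. As a sketch under stated assumptions, the code below computes common spatiotemporal parameters (step length, cadence, speed, step-time variability) from heel-strike events, assuming heel positions along the walking direction and their timestamps have already been extracted from RGB-D skeletons. The resulting feature vector would be the classifier's input; the OC-SVM itself is not reproduced, and `gait_parameters` is a hypothetical name.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class GaitParameters:
    mean_step_length_m: float
    cadence_steps_per_min: float
    speed_m_per_s: float
    step_time_cv: float  # coefficient of variation of step time (variability)


def gait_parameters(heel_strikes: List[Tuple[float, float]]) -> GaitParameters:
    """Compute gait parameters from (time_s, position_m) heel-strike events.

    Consecutive events are assumed to alternate between left and right feet,
    with positions measured along the walking direction.
    """
    if len(heel_strikes) < 3:
        raise ValueError("need at least three heel strikes")
    times = [t for t, _ in heel_strikes]
    positions = [p for _, p in heel_strikes]
    step_lengths = [abs(b - a) for a, b in zip(positions, positions[1:])]
    step_times = [b - a for a, b in zip(times, times[1:])]
    duration = times[-1] - times[0]
    mean_step_time = sum(step_times) / len(step_times)
    variance = sum((s - mean_step_time) ** 2 for s in step_times) / len(step_times)
    return GaitParameters(
        mean_step_length_m=sum(step_lengths) / len(step_lengths),
        cadence_steps_per_min=60.0 * len(step_times) / duration,
        speed_m_per_s=sum(step_lengths) / duration,
        step_time_cv=variance ** 0.5 / mean_step_time,
    )
```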

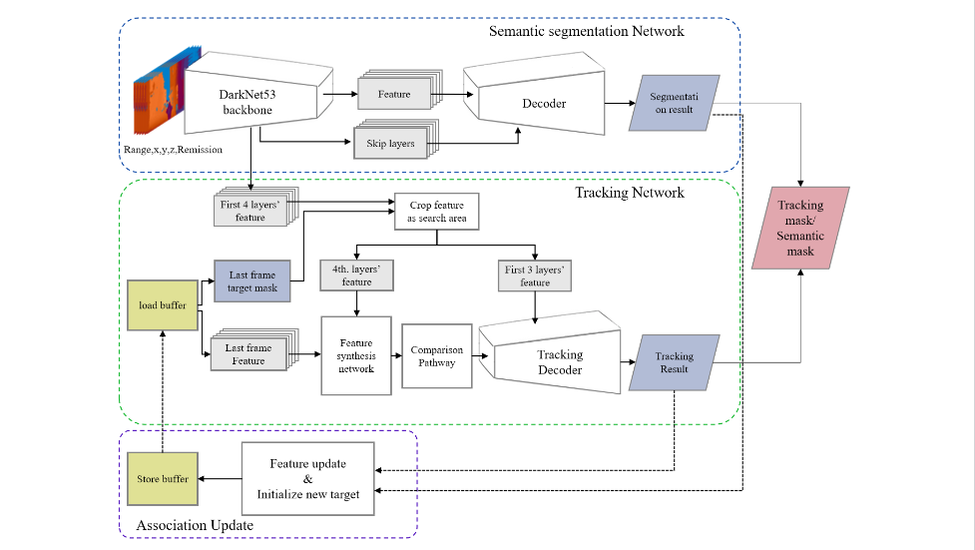

A 3D-LiDAR Multi-Object Segmentation Tracker Applicable to Human-Robot Interaction

With an aging society and a declining birth rate, demand is increasing for home care and for services in public settings. In recent years, the impact of COVID-19 has also created a need for these services to become unmanned. As a possible solution, service robots require greater cognitive abilities to handle increasingly complex human-robot interaction (HRI) tasks. In this study, we propose a perception system that performs semantic segmentation of the current environment and multi-object tracking of the pedestrians in it. Unlike previous methods that track point clouds with a Kalman filter, our method is based on feature comparison using a deep convolutional neural network. First, the current 3D LiDAR point cloud is transformed into a range image by spherical projection, and the image is fed into the semantic segmentation network to predict semantic labels. The prediction and the target features from the previous frame are then fed into the tracking network to obtain the tracking result for the current frame, after which the target features are updated. We also developed a semantic segmentation dataset of indoor 3D LiDAR point clouds and achieved good results on it. To validate the proposed work, we deployed the system on our home-made robot and successfully conducted a pedestrian-leading task.
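The spherical projection step can be sketched as follows, using the formulation common in range-image segmentation networks such as RangeNet++: yaw gives the column and pitch, normalized by the vertical field of view, gives the row. The image size and field-of-view defaults are hypothetical and must match the actual sensor; each pixel keeps the closest point's range, and empty pixels are −1.

```python
import math
from typing import List, Tuple


def spherical_projection(points: List[Tuple[float, float, float]],
                         height: int = 64, width: int = 1024,
                         fov_up_deg: float = 3.0, fov_down_deg: float = -25.0
                         ) -> List[List[float]]:
    """Project (x, y, z) LiDAR points into a height x width range image.

    Each pixel stores the range of the closest point that falls into it;
    empty pixels are -1. FOV defaults are hypothetical sensor values.
    """
    fov_up = math.radians(fov_up_deg)
    fov_down = math.radians(fov_down_deg)
    fov = fov_up - fov_down
    image = [[-1.0] * width for _ in range(height)]
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue
        yaw = math.atan2(y, x)            # azimuth in [-pi, pi]
        pitch = math.asin(z / r)          # elevation angle
        u = 0.5 * (1.0 - yaw / math.pi) * width          # column coordinate
        v = (1.0 - (pitch - fov_down) / fov) * height     # row coordinate
        col = min(width - 1, max(0, int(math.floor(u))))
        row = min(height - 1, max(0, int(math.floor(v))))
        if image[row][col] < 0 or r < image[row][col]:
            image[row][col] = r
    return image
```

Keeping the closest point per pixel preserves the surfaces the sensor actually sees when several points fall into one cell.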

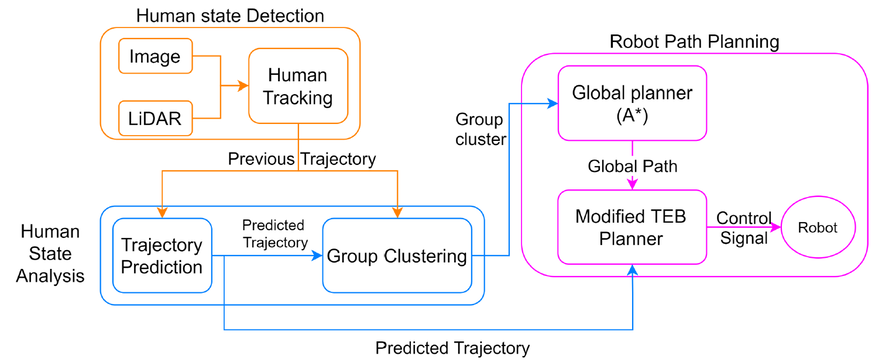

Social Crowd Navigation of a Mobile Robot based on Human Trajectory Prediction and Hybrid Sensing

In this study, we propose a hierarchical path planning algorithm that first captures the local crowd movement around the robot using an RGB camera combined with LiDAR and predicts the movement of nearby people, and then uses a global path planner with this crowd information to generate an appropriate global path. Once the global path is decided, the low-level control system takes the crowd predictions and the high-level global path and generates the actual velocity commands for the robot while respecting social norms. Combining the high accuracy of computer vision for human recognition with the high precision of LiDAR, the system can accurately track the locations of surrounding people. Through high-level path planning, the robot can adopt different movement strategies in different scenarios, which lets it move more flexibly, while crowd prediction allows it to generate more efficient and socially acceptable paths. With this system, even in a highly dynamic crowded environment, the robot can successfully plan an appropriate path to its destination without causing psychological discomfort to others.
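The abstract does not specify the trajectory predictor. The sketch below substitutes a constant-velocity model, a common baseline in crowd navigation, and shows how predicted positions could be checked against the robot's planned positions at matching time steps. The function names and the idea of thresholding clearance are hypothetical illustrations, not the published planner.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def predict_constant_velocity(track: List[Point], dt: float, horizon: int) -> List[Point]:
    """Extrapolate a tracked person's last velocity for `horizon` steps of `dt` seconds."""
    if len(track) < 2:
        return [track[-1]] * horizon if track else []
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    return [(x1 + vx * dt * k, y1 + vy * dt * k) for k in range(1, horizon + 1)]


def min_clearance(robot_path: List[Point], predicted: List[Point]) -> float:
    """Smallest robot-person distance at matching future time steps."""
    return min(((rx - px) ** 2 + (ry - py) ** 2) ** 0.5
               for (rx, ry), (px, py) in zip(robot_path, predicted))
```

A planner could reject or penalize candidate paths whose clearance falls below a chosen social-distance threshold.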

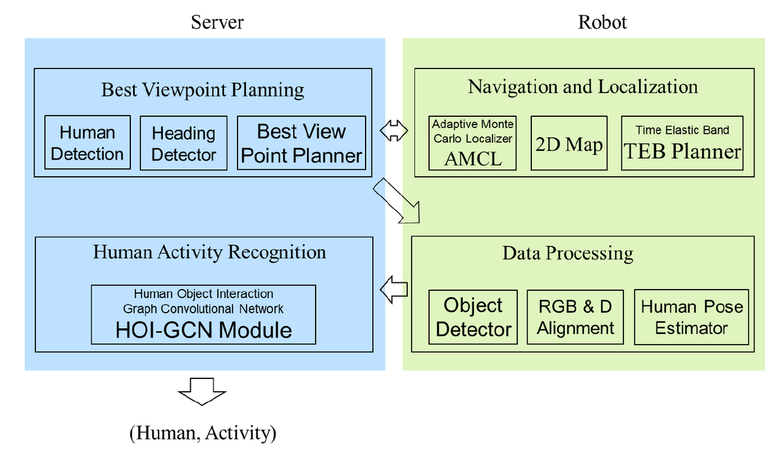

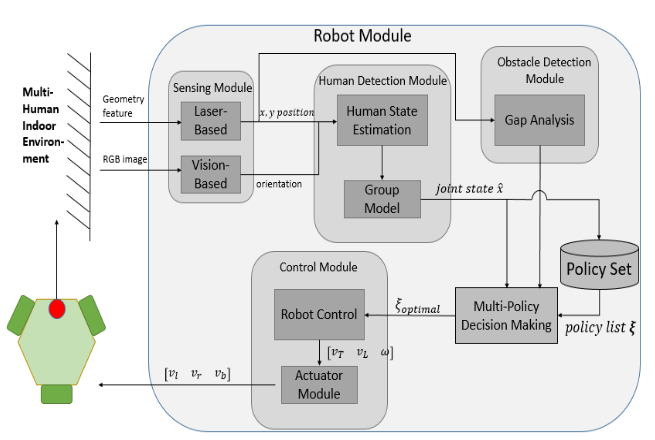

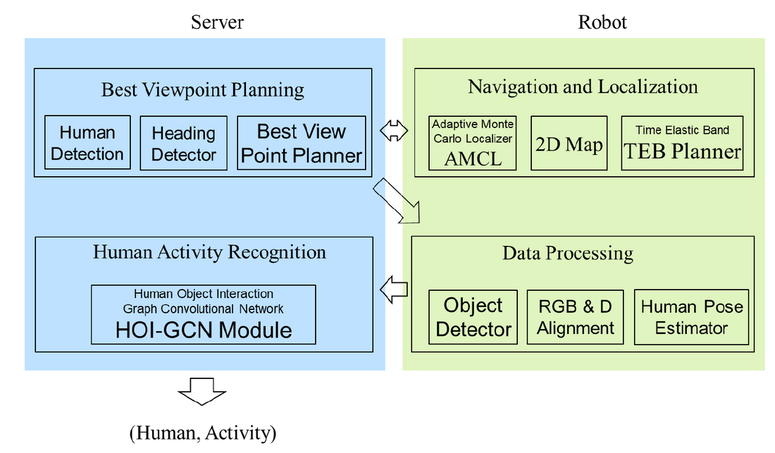

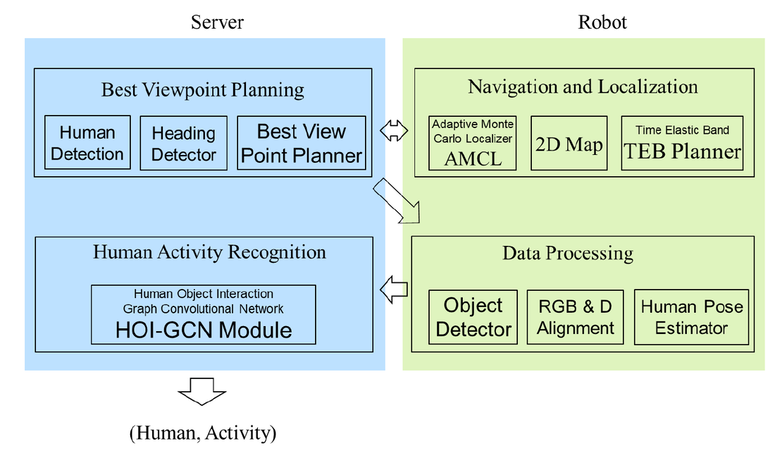

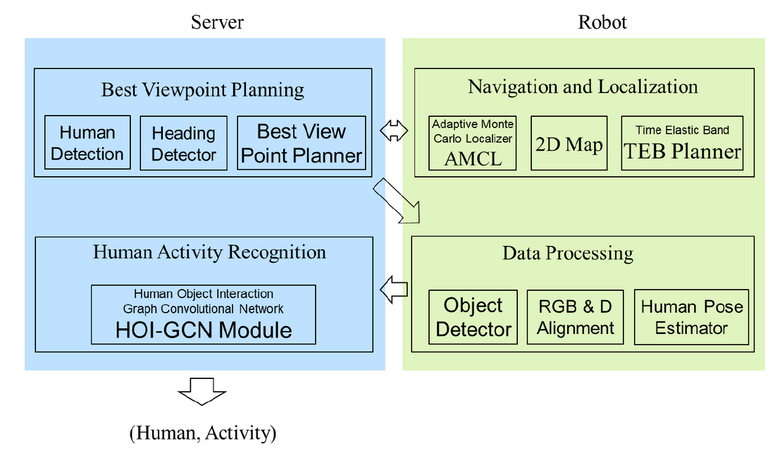

Human Activity Recognition System for Home Service Robot Utilizing Adaptive Graph Convolutional Network and Human Object Interaction

In this study, we propose a human activity recognition system, the human-object interaction graph convolutional neural network (HOI-GCN), which builds on an adaptive graph convolutional network, human-object interaction, and object recognition to understand human behaviors in the surroundings. The model predicts human behavior in indoor environments by taking the three-dimensional human skeleton and reference points of objects in the environment as simultaneous inputs, thereby achieving better accuracy and robustness than previous approaches. We also divide the architecture into two parts: one handles images and other privacy-sensitive data and runs on the robot, while the other performs the complex human activity recognition, which involves no private data, on a back-end server. With this design, we eliminate the privacy concerns of the system. In experiments, our architecture achieved higher accuracy on the dataset than previous methods.
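HOI-GCN's exact formulation is not given here. As a sketch of the underlying operation, the code below implements one plain (non-adaptive) graph convolution layer, H' = ReLU(D^(-1/2) (A + I) D^(-1/2) H W), on a graph whose nodes can include both skeleton joints and object reference points. The adaptive variant additionally learns adjacency terms, which this sketch does not model; the node layout in the test (hand, elbow, cup) is hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """Compute D^-1/2 (A + I) D^-1/2 for an undirected graph."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / (deg[i] ** 0.5 * deg[j] ** 0.5) for j in range(n)]
            for i in range(n)]


def gcn_layer(adj: Matrix, features: Matrix, weights: Matrix) -> Matrix:
    """One graph convolution: ReLU(A_norm @ X @ W)."""
    out = matmul(matmul(normalized_adjacency(adj), features), weights)
    return [[max(0.0, v) for v in row] for row in out]
```

Appending object reference points as extra nodes, connected to relevant joints such as the hands, is one way such a layer can mix skeleton and object context in a single pass.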

Finally, we deployed the HOI-GCN model on the robot and proposed a statistics-based best-viewpoint planning system that efficiently finds the clearest observation point on a two-dimensional plane, one not obscured by furniture, and navigates the robot to that point for observation, improving the overall accuracy of the HOI-GCN system in real home environments.
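The viewpoint planner is described only as statistics-based. A simplified stand-in, not the published method, is sketched below: on a 2D occupancy grid (0 = free, 1 = furniture), each free candidate cell is scored by how many target cells on the person it can see along a Bresenham line, and the highest-scoring cell wins. The grid, target cells, and candidate list are hypothetical inputs.

```python
from typing import List, Optional, Tuple

Cell = Tuple[int, int]  # (x, y); the grid is indexed grid[y][x]


def bresenham(a: Cell, b: Cell) -> List[Cell]:
    """Grid cells on the straight line from a to b (inclusive)."""
    (x0, y0), (x1, y1) = a, b
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    cells = []
    while True:
        cells.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def visible(grid: List[List[int]], a: Cell, b: Cell) -> bool:
    """True if no occupied cell lies strictly between a and b."""
    return all(grid[y][x] == 0 for x, y in bresenham(a, b)[1:-1])


def best_viewpoint(grid: List[List[int]], targets: List[Cell],
                   candidates: List[Cell]) -> Optional[Cell]:
    """Pick the free candidate cell that sees the most target cells."""
    best, best_count = None, -1
    for c in candidates:
        if grid[c[1]][c[0]] != 0:
            continue  # cannot stand inside furniture
        count = sum(visible(grid, c, t) for t in targets)
        if count > best_count:
            best, best_count = c, count
    return best
```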

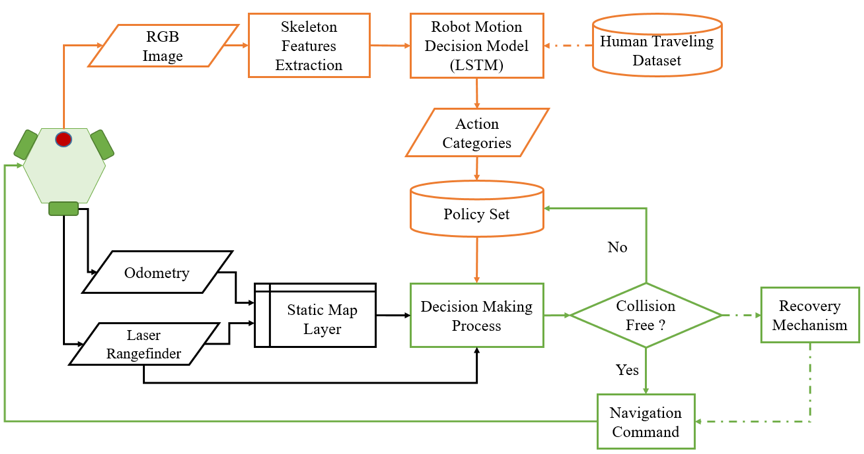

Walk Like a Human: Social Navigation of Omnidirectional Robot Based on First Person View

This thesis proposes a learning-based social navigation method for an omnidirectional robot in populated indoor environments. The aim is not only to produce an avoidance strategy directly from the images the robot perceives in first-person view, so that it performs socially friendly motions learned from humans, but also to combine human avoidance with static obstacle avoidance to complete the whole navigation task. To achieve this, a data collector wears an RGB camera and an IMU to record pedestrians' skeletons and the collector's own walking strategy, respectively. We then build a human traveling dataset by preprocessing the collected data, and use the human skeletons and distances as inputs to train an LSTM model that outputs robot motions. Furthermore, laser-based simultaneous localization and mapping (SLAM) is used to achieve static obstacle avoidance and dynamic human avoidance simultaneously in complicated indoor environments.

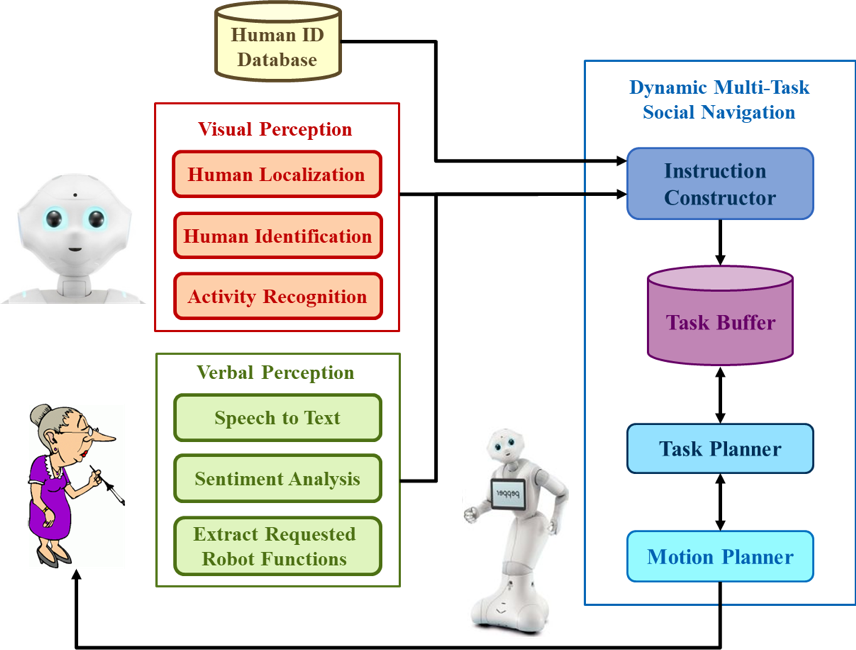

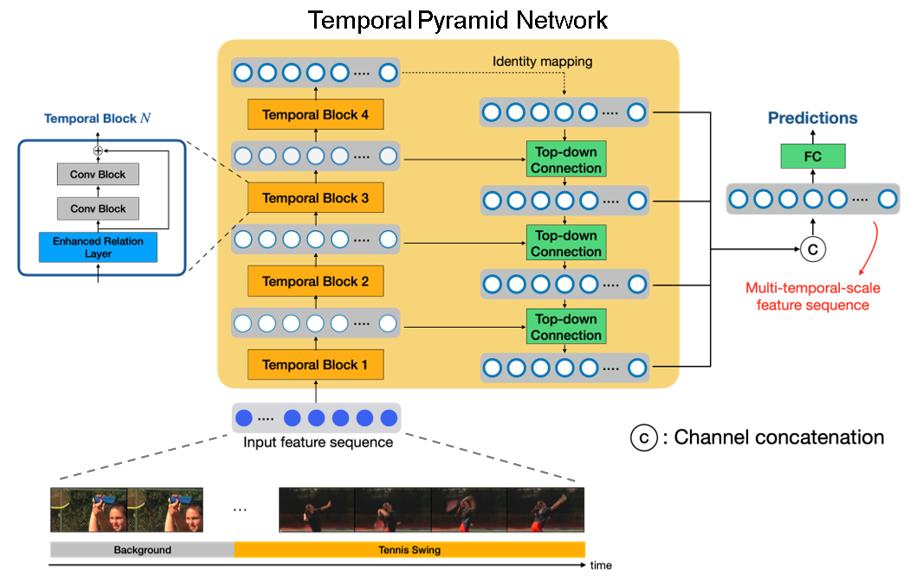

Optimal Navigation System for a Mobile Robot to Execute Dynamical Multiple Social Tasks

In this thesis, inspired by the Dynamic TAMP framework, we propose a novel task-oriented navigation system that lets robots accomplish social interaction tasks with the help of perception. To organize these social tasks, we propose an instruction structure whose reward decays with respect to priority and time. We model the indoor scenario as a graph to allocate instructions, and propose a task planning algorithm that considers not only the priorities among multiple tasks but also time efficiency by optimizing the accumulated reward. For the perception that helps assign instruction priorities, we propose a visual sub-system for human localization, identification, and frame-wise hierarchical activity recognition. For verbal perception, we design a sub-system that understands human words as well as sentiment. Given limited computational speed and resources, the system performs perception and decision-making simultaneously using both deep learning modules and heuristic algorithms. With our system, the social robot can not only meet human requirements but also interact efficiently with people in a multi-human environment, achieving sophisticated human-robot interaction (HRI).
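To make the decaying-reward idea concrete, the sketch below assumes each task's reward is its priority times exp(−decay · arrival time), with travel times between graph nodes given in a table, and finds the task order that maximizes the accumulated reward. The exponential form, the decay rate, and the exhaustive search are assumptions for illustration; exhaustive search grows factorially, which is why a real system would rely on heuristics.

```python
import itertools
import math
from typing import Dict, List, Tuple


def accumulated_reward(order: List[str], start: str,
                       priority: Dict[str, float],
                       travel_time: Dict[Tuple[str, str], float],
                       decay: float) -> float:
    """Sum of priority * exp(-decay * arrival_time) along a visiting order."""
    t, here, total = 0.0, start, 0.0
    for task in order:
        t += travel_time[(here, task)]
        total += priority[task] * math.exp(-decay * t)
        here = task
    return total


def best_order(start: str, priority: Dict[str, float],
               travel_time: Dict[Tuple[str, str], float],
               decay: float = 0.1) -> Tuple[List[str], float]:
    """Exhaustively search task orders (only practical for a handful of tasks)."""
    best: List[str] = []
    best_reward = -math.inf
    for perm in itertools.permutations(priority):
        r = accumulated_reward(list(perm), start, priority, travel_time, decay)
        if r > best_reward:
            best, best_reward = list(perm), r
    return best, best_reward
```

With a high-priority task nearby and a low-priority task far away, this objective serves the nearby urgent task first, because delaying it costs more reward than delaying the other.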

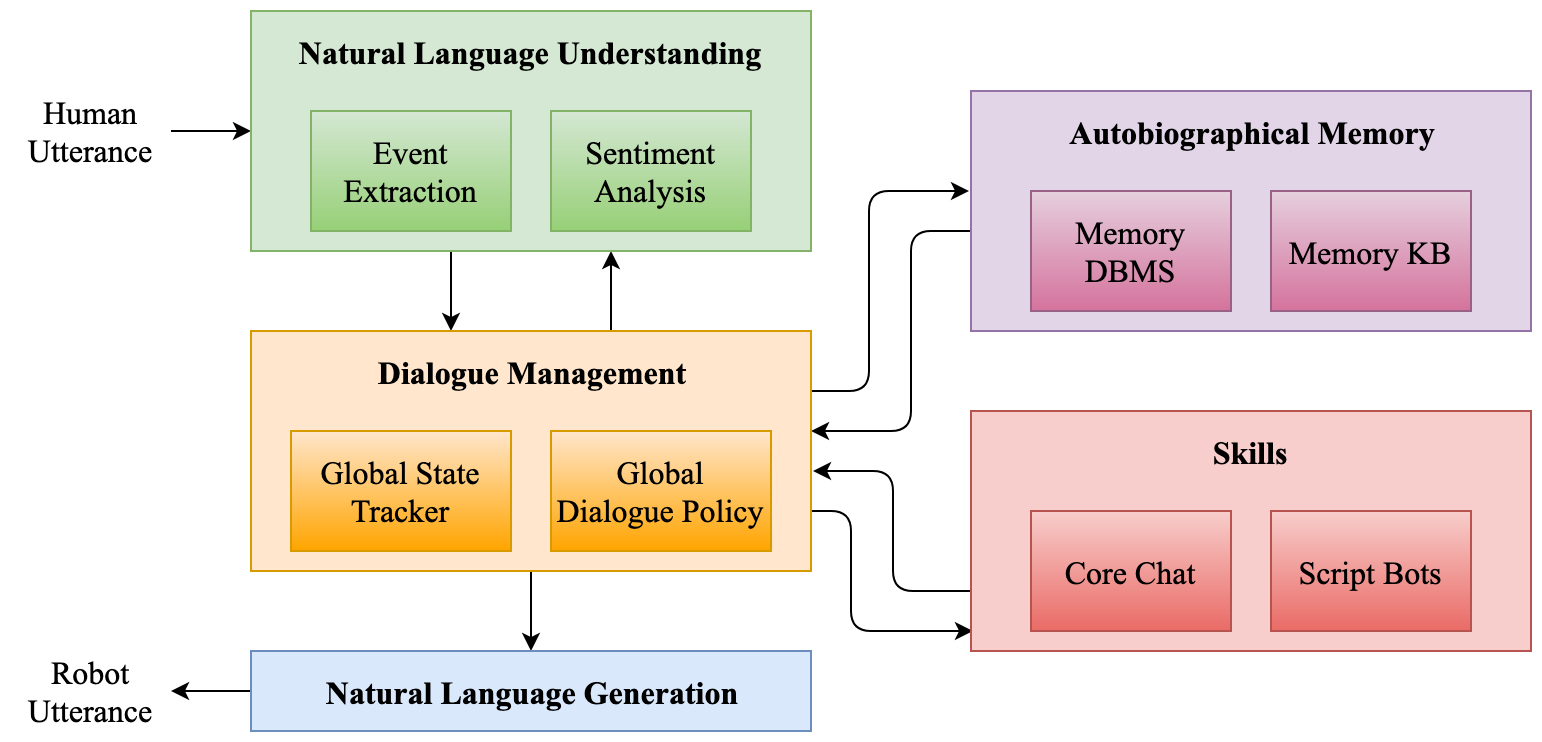

Dialogue System Assisted by Autobiographical Memory

Social robots can provide mental support through communication, especially for elders. This work builds a computational memory model based on the psychological autobiographical memory model and embeds it into a dialogue system, giving robots the ability to memorize people's personal information and to generate relevant responses. We specially designed a Natural Language Understanding (NLU) module to extract memory items from human utterances. We then embed memory items into the robot's utterances using either scripts or deep learning techniques (TA-Seq2seq), depending on the predefined scenario.

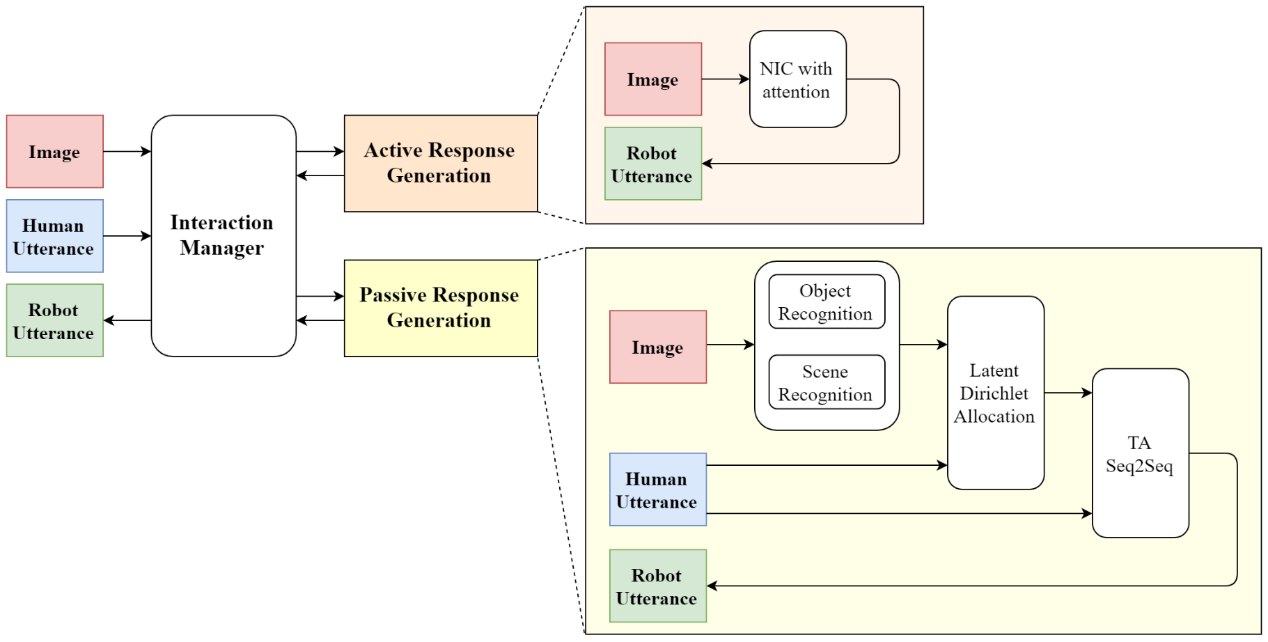

Visual Chat: Image Grounded Chinese Response Generation

With a gradually aging society and labor shortages, companionship for elders is receiving more and more attention. We observe that people can find conversation topics in their surroundings, that is, they talk about what they see, but robots still lack this ability. We therefore developed a dialogue system that incorporates visual information into dialogue using deep learning techniques (YOLO, VGG16-places365, and TA-Seq2seq) and LDA. The system combines active and passive response generation so that it can handle different situations.

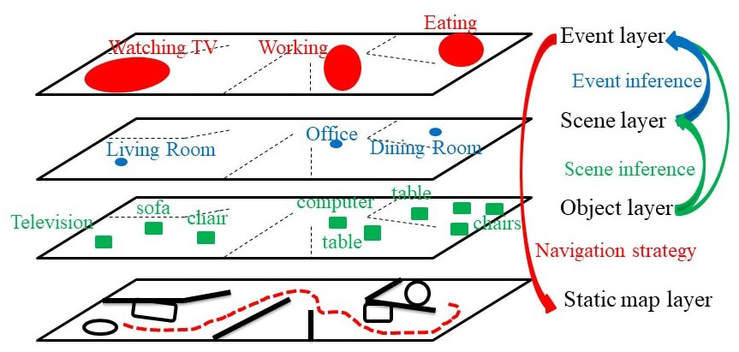

Robust Indoor Localization, Human Event Detection and Socially Friendly Navigation Based on Multi-Layer Environmental Affordance Map

This research proposes a novel system architecture comprising a "localization subsystem", a "perception subsystem", an "inference subsystem", and a "navigation subsystem". In the localization subsystem, we propose a method that fuses two different kinds of Simultaneous Localization And Mapping (SLAM) algorithms to achieve more robust localization. In the perception subsystem, the robot uses a neural network for object recognition and combines the result with the depth image to determine each object's position on the map built by the localization subsystem. For the inference subsystem, we propose a probability model that makes the best inference from the "Affordance" provided by the user and the data of the environment. The advantage of this system is that the robot can make good inferences without the large amounts of training data and training time that neural networks require, and the inference results change according to the information provided by the user.
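The probability model is not specified in the abstract. One simple form that fits its description (no training data, results that depend on the user's requested affordance) is a Bayesian posterior over locations, with a noisy-OR likelihood over the objects detected at each location. The sketch below assumes that form; the object-affordance probabilities are hypothetical hand-set knowledge.

```python
from typing import Dict, List, Tuple


def infer_location(affordance: str,
                   objects_at: Dict[str, List[str]],
                   affords: Dict[Tuple[str, str], float],
                   prior: Dict[str, float]) -> Dict[str, float]:
    """Posterior over locations for a requested affordance (e.g. "sit").

    The likelihood that a location provides the affordance is a noisy-OR over
    the objects detected there; affords[(obj, affordance)] values are
    hypothetical hand-set knowledge, not learned from data.
    """
    unnormalized: Dict[str, float] = {}
    for loc, objs in objects_at.items():
        p_none = 1.0  # probability that no object here provides the affordance
        for obj in objs:
            p_none *= 1.0 - affords.get((obj, affordance), 0.0)
        unnormalized[loc] = prior.get(loc, 0.0) * (1.0 - p_none)
    total = sum(unnormalized.values())
    if total == 0.0:
        return {loc: 0.0 for loc in objects_at}
    return {loc: v / total for loc, v in unnormalized.items()}
```

Changing the requested affordance (say, from "sit" to "cook") changes which objects count as evidence, so the same map yields a different target location.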

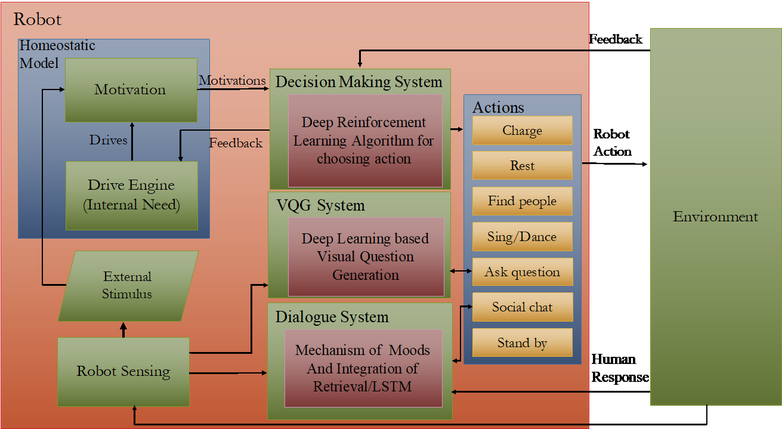

Autonomous Social Companion Robot with Moods

To make the robot behave like a human, we propose a novel system based on several kinds of deep learning algorithms. First, we model the robot using homeostatic theory and Maslow’s hierarchy of needs, and build an autonomous system so that the robot knows what to do at every moment. Second, we build a dialogue system that includes not only basic question answering but also chit-chat, with a mood mechanism and a text style translation model to improve the relationship between robot and human. Third, we develop a visual question generation capability so that the robot can ask people diverse questions about what it has observed.

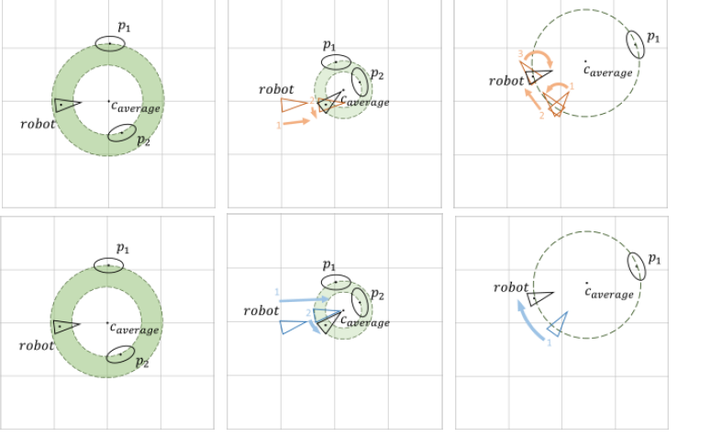

A Socially-Aware Navigation of Omnidirectional Mobile Robot with Extended Social Force Model in Multi-Human Indoor Environment

This thesis proposes a new navigation method that lets an omnidirectional mobile robot maneuver in complex, populated environments. In a populated indoor environment, the robot has to react to its surroundings and achieve both physical and psychological navigational safety. Unlike previous work, we incorporate the robot's omnidirectional movement into the Social Force Model and propose an extended rotational social force. With this extended model, the framework takes "human comfort" and "naturalness" into consideration and can navigate in complex, populated environments.
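For reference, the sketch below computes the basic Social Force Model of Helbing and Molnár on the robot (unit mass): a relaxation term toward the desired velocity pointing at the goal, plus exponential repulsion from each nearby person. All parameter values are hypothetical, and the extended rotational term proposed in this thesis is not modelled.

```python
import math
from typing import List, Tuple

Vec = Tuple[float, float]


def social_force(pos: Vec, vel: Vec, goal: Vec, humans: List[Vec],
                 desired_speed: float = 0.8, tau: float = 0.5,
                 strength: float = 2.0, range_b: float = 0.4,
                 radius_sum: float = 0.6) -> Vec:
    """Basic social force on the robot: goal attraction + human repulsion.

    Parameter values are hypothetical; the thesis's extended rotational
    social force is not included.
    """
    gx, gy = goal[0] - pos[0], goal[1] - pos[1]
    g_norm = math.hypot(gx, gy) or 1.0
    # Relax toward the desired velocity pointing at the goal.
    fx = (desired_speed * gx / g_norm - vel[0]) / tau
    fy = (desired_speed * gy / g_norm - vel[1]) / tau
    for hx, hy in humans:
        dx, dy = pos[0] - hx, pos[1] - hy
        d = math.hypot(dx, dy) or 1e-6
        # Repulsion grows exponentially as the distance drops below radius_sum.
        magnitude = strength * math.exp((radius_sum - d) / range_b)
        fx += magnitude * dx / d
        fy += magnitude * dy / d
    return fx, fy
```

Because an omnidirectional base can move in x and y independently, the resulting force vector can be integrated into a velocity command without first turning the robot.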

Map-less Indoor Visual Navigation based on Image Embedding and Deep Reinforcement Learning

We propose a novel architecture whose objective is large-scale navigation in indoor environments without a pre-constructed map. Navigating a large indoor environment requires a good understanding of complex spatial structure, especially when the space contains many walls and doors that may occlude the robot's view. Using the proposed hierarchical deep reinforcement learning and an image embedding space generated by an auto-encoder, our method achieves large-scale indoor visual navigation without extra map information or human instruction.

Hybrid Interactive Reinforcement Learning based Assistive Robot Action-Planner for the Emotional Support of Children

Socially Assistive Robotics (SAR) is an emerging multidisciplinary field with potential applications in education, health management, and elder care. We propose an interactive decision-planning module developed for RoBoHoN to explore the feasibility of using a robot to engage with children.

To validate the usefulness of the proposed methodology, we conducted a pilot study with healthy elementary-school-aged children. The results suggest that our platform, with the developed Wizard-of-Oz interface, has the potential to be deployed in a real environment (e.g., a classroom or hospital).

Adaptive Behavior Learning Social Robot Navigation with Composite Reinforcement Learning

Deep reinforcement learning (DRL) has recently been applied to robotics. For service robots, we propose a composite reinforcement learning (CRL) system, a framework that uses sensor input to learn how to generate the robot's velocity. The system uses DRL to learn the velocity in a given set of scenarios, together with a reward update module that updates the reward function based on human feedback. The CRL system can incrementally learn to determine its velocity according to given rules, and it keeps collecting human feedback so that the reward functions inside the system stay synchronized with current social norms.
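The reward update module is described only at a high level. As a minimal sketch under the assumption of a linear reward r = w · φ(s), the code below applies a perceptron-style correction whenever human feedback (+1 approve, −1 disapprove) disagrees in sign with the current reward. The features, learning rate, and function names are hypothetical, and the DRL velocity policy itself is not shown.

```python
from typing import List


def reward(weights: List[float], features: List[float]) -> float:
    """Linear reward r = w . phi(s)."""
    return sum(w * f for w, f in zip(weights, features))


def update_weights(weights: List[float], features: List[float],
                   feedback: int, lr: float = 0.1) -> List[float]:
    """Nudge weights toward human feedback (+1 approve, -1 disapprove).

    Perceptron-style rule: if the current reward already agrees in sign with
    the feedback, keep the weights; otherwise move them along the feature
    vector in the direction of the feedback.
    """
    if feedback not in (-1, 1):
        raise ValueError("feedback must be +1 or -1")
    if feedback * reward(weights, features) > 0:
        return list(weights)
    return [w + lr * feedback * f for w, f in zip(weights, features)]
```

For instance, with hypothetical features (speed, closeness to a person), repeated disapproval of fast driving near people gradually lowers the reward for that situation, which the DRL policy would then learn to avoid.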

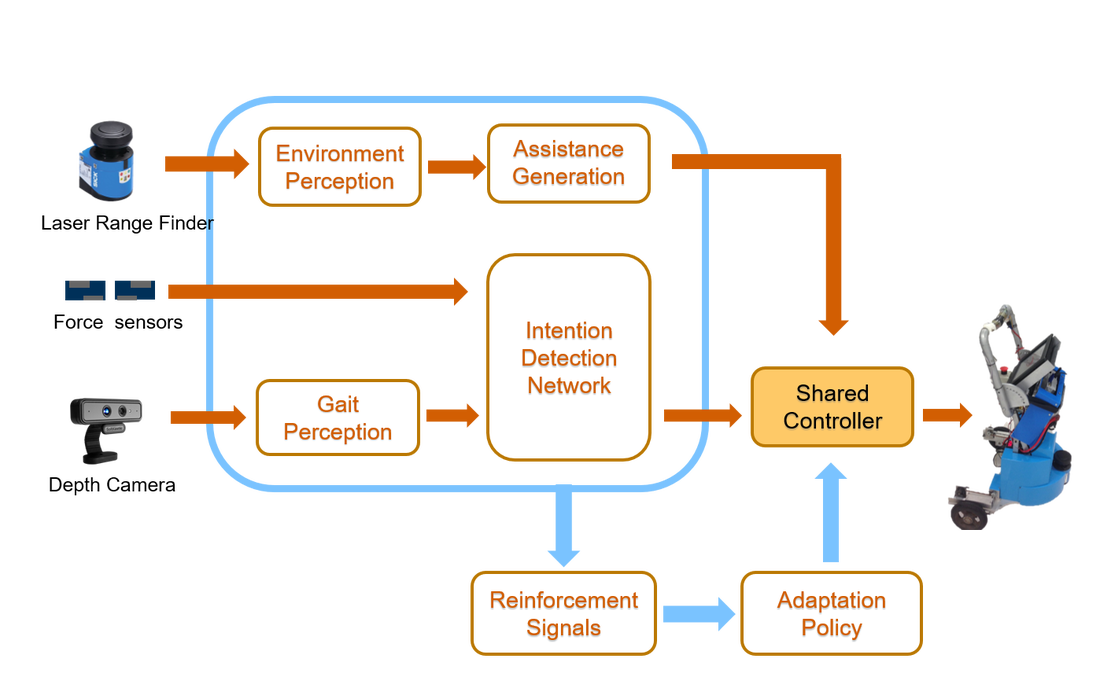

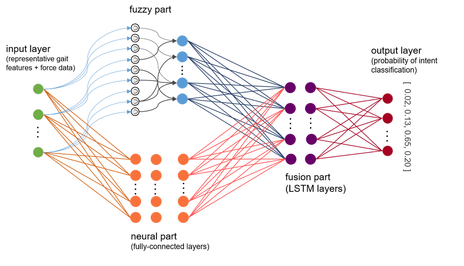

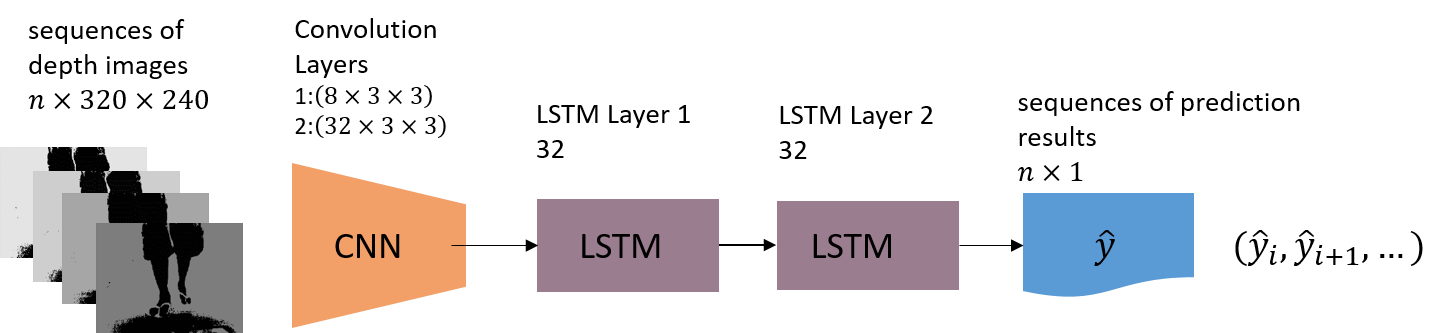

Learning-based Shared Control for A Smart Walker with Multi-modal Interface

Walking-aid robots are developed as assistive devices that enable safe, stable, and efficient locomotion for elderly or disabled individuals. We propose a learning-based shared control system with a multi-modal interface that contains both cognitive human-robot interaction (HRI) for gait analysis and traditional physical HRI for measuring the force exerted by the user. The interface extracts navigation intentions with a novel neural network based method that combines features from:

(i) a depth camera, to estimate the user's leg kinematics and to infer how the user's orientation deviates from the robot's velocity direction, and

(ii) force sensors to measure the physical interaction between the human’s hands and the robotic walker.

Because the robot should autonomously adapt to different users' operating preferences and motor abilities, we propose a reinforcement learning-based shared control algorithm that not only improves the user's comfort while using the device but also adapts automatically to the user's behavior.
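Shared control is often expressed as a blend of the user's and the robot's commands, u = α·u_user + (1 − α)·u_robot. The sketch below shows that blend plus a hypothetical adaptation rule that gives the user more authority when the two commands agree and less when they conflict. This rule is a simple stand-in for the reinforcement learning algorithm in the abstract, not a reproduction of it; treating (linear, angular) velocity as a plain 2-vector is also a simplification.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]  # (linear m/s, angular rad/s)


def blend(user_cmd: Vec, robot_cmd: Vec, alpha: float) -> Vec:
    """Linear blending: alpha = 1 gives full user control, 0 full autonomy."""
    a = min(1.0, max(0.0, alpha))
    return (a * user_cmd[0] + (1 - a) * robot_cmd[0],
            a * user_cmd[1] + (1 - a) * robot_cmd[1])


def adapt_alpha(alpha: float, user_cmd: Vec, robot_cmd: Vec,
                rate: float = 0.05) -> float:
    """Hypothetical adaptation: raise alpha when commands agree, lower it when
    they conflict. (v, w) is treated as a plain 2-vector for simplicity."""
    dot = user_cmd[0] * robot_cmd[0] + user_cmd[1] * robot_cmd[1]
    norms = math.hypot(*user_cmd) * math.hypot(*robot_cmd)
    agreement = dot / norms if norms > 0 else 0.0  # cosine in [-1, 1]
    return min(1.0, max(0.0, alpha + rate * agreement))
```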

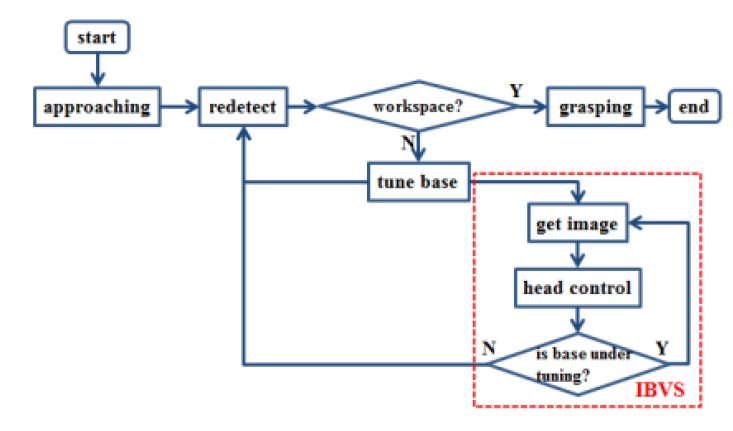

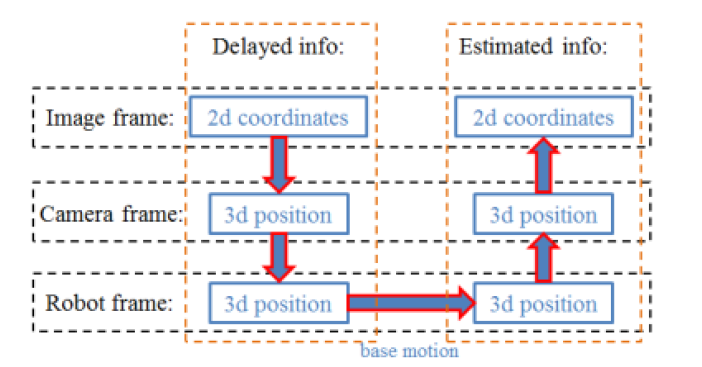

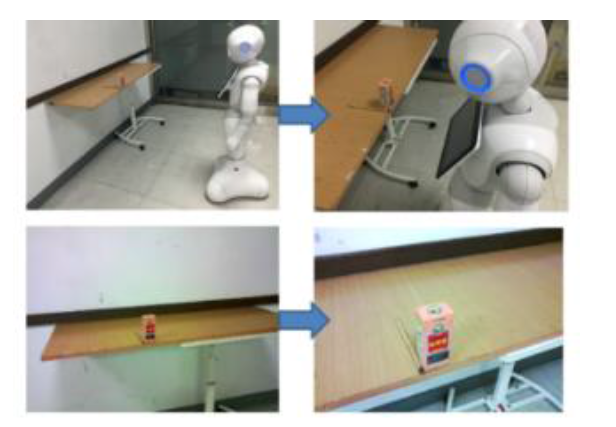

Visual Servoing with Time-delay Compensation for Humanoid Mobile Manipulator

Visual servo systems usually suffer from time delay, typically caused by lengthy image processing and data transmission. We propose a novel visual servo system that compensates for large time delays: it integrates velocity, combining image estimation and prediction with the kinematic model, to achieve indirect velocity control of joints operating in position-control mode. The proposed system controls the robot's neck joints and keeps the object within the camera's field of view during the approaching phase. We evaluated the approach experimentally on a real wheeled humanoid robot; the results are promising and show that the method is feasible and practical.
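A minimal single-axis sketch of the two ideas in this abstract, under simplifying assumptions: the delayed image measurement is corrected by integrating the neck pan velocity applied since the frame was captured, and a proportional velocity law is integrated into a position setpoint so that a position-controlled joint realizes it. The linear pixel-per-radian relation, the sign convention, and all names are hypothetical; the published system uses the full kinematic model.

```python
from typing import List


def predict_feature(measured_u: float, joint_vel_history: List[float],
                    dt: float, pixels_per_rad: float) -> float:
    """Compensate image-processing delay by integrating the neck velocity
    applied since the frame was captured.

    Assumes a positive pan shifts the feature by -pixels_per_rad per radian.
    """
    delta_angle = sum(v * dt for v in joint_vel_history)
    return measured_u - pixels_per_rad * delta_angle


def position_command(current_q: float, predicted_u: float, target_u: float,
                     gain: float, dt: float, pixels_per_rad: float) -> float:
    """Integrate a proportional velocity law into a joint position setpoint,
    so a position-controlled joint realizes the velocity indirectly."""
    velocity = gain * (predicted_u - target_u) / pixels_per_rad
    return current_q + velocity * dt
```

Without the prediction step, the controller would keep correcting an error that the neck has already partly removed, which is the usual source of overshoot under large delays.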

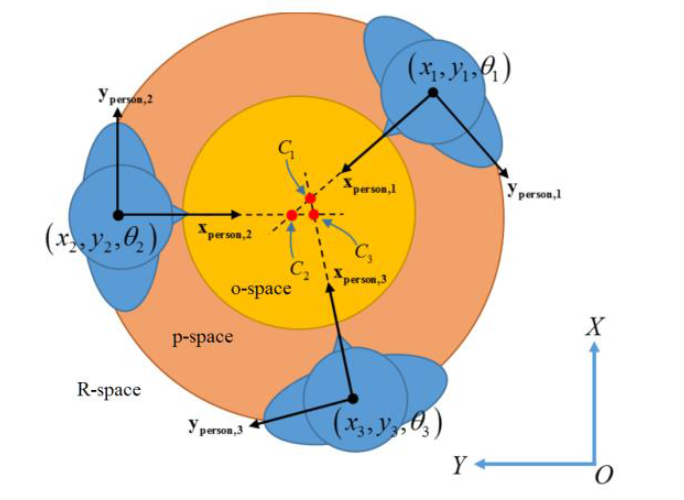

A Study on the Social Acceptance of a Robot in a Multi-Human Interaction Using an F-formation Based Motion Model

As robots participate in people's daily activities more and more frequently, mobility performance has become one of the main factors determining how harmoniously robots will share environments with humans in the near future. We propose a socializing model that lets a robot participating in an interaction with a group of human peers reach its socially optimal position. From a theoretical perspective, we identify the most prominent features required for the social acceptance of robots interacting with multiple humans, backing our arguments with relevant sociological theory. We conducted experiments in which human participants were invited to interact with a robot that could be constrained to perform either only holonomic or only nonholonomic motions.
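The F-formation based model is not detailed in the abstract. As a heavily simplified sketch, the code below estimates the group's o-space as a circle (center at the participants' centroid, radius equal to their mean distance from it) and places the robot in the middle of the largest angular gap on that circle. Ignoring the participants' facing directions is an assumption of this sketch, not of the published model.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def join_position(humans: List[Point]) -> Point:
    """Place the robot in the largest gap of a circular F-formation.

    Simplification: the o-space center is the centroid of the participants
    and the circle radius is their mean distance to it.
    """
    if len(humans) < 2:
        raise ValueError("need at least two participants")
    cx = sum(x for x, _ in humans) / len(humans)
    cy = sum(y for _, y in humans) / len(humans)
    radius = sum(math.hypot(x - cx, y - cy) for x, y in humans) / len(humans)
    angles = sorted(math.atan2(y - cy, x - cx) for x, y in humans)
    best_gap, best_mid = -1.0, 0.0
    for i, a in enumerate(angles):
        nxt = angles[(i + 1) % len(angles)]
        gap = (nxt - a) % (2 * math.pi)  # angular gap to the next participant
        if gap > best_gap:
            best_gap, best_mid = gap, a + gap / 2
    return cx + radius * math.cos(best_mid), cy + radius * math.sin(best_mid)
```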

Research Introduction

Our goal is to make robots capable of providing a wide variety of services in real environments and to improve interactions between robots and humans. Mobile robot research has gained wide attention since breakthroughs in both algorithms and hardware. We therefore want to push it further and make robots intelligent enough to deal with real-world problems. For robots to be applied effectively in the foreseeable future, they must be able to interact with people. As a result, our research also focuses on human-robot interaction (HRI) and AI, so that robots can understand human intentions and infer the proper reactions.

Currently, our group has the following research directions:

1. Robot Vision – Our group focuses on robot vision systems because cameras provide rich information.

A. Object Recognition B. Tracking / Gesture Recognition / Human Detection / Face Recognition C. Visual SLAM

2. Mobile Robot System – We cooperate with the Advanced Control Lab of the EE department (Prof. Li-Chen Fu’s lab).

A. Control System: Robot Navigation / Behavior Control / Path Planning B. Mapping and Localization

3. Human Robot Interaction

A. Knowledge Base Reasoning B. Reinforcement Learning

Journal

- Shih-Huan Tseng, Yen Chao, Ching Lin, Li-Chen Fu. “Service robots: System design for tracking people through data fusion and initiating interaction with the human group by inferring social situations”, Robotics and Autonomous Systems, 2016.

- Chung Dial Lim, Chia-Ming Wang, Ching-Ying Cheng, Yen Chao, Shih-Huan Tseng, Li-Chen Fu. “Sensory Cues Guided Rehabilitation Robotic Walker Realized by Depth Image-Based Gait Analysis”, IEEE Transactions on Automation Science and Engineering, 13(1), 171-180, 2016.

- Chi-Pang Lam, Chen-Tun Chou, Kuo-Hung Chiang, and Li-Chen Fu. "Human-Centered Robot Navigation—Towards a Harmoniously human–Robot Coexisting Environment". IEEE Transactions on Robotics, Vol. 27, No. 1, Feb. 2011

- M.C. Shiu, L.C. Fu, H.T. Lee, F.L. Lian. "Modular Design of Reconfigurable Electromagnetic Robots". Advanced Robotics, Vol. 24, No. 7, pp. 1059-1078, 2010

- Chih-Fu Chang and Li-Chen Fu. "Control Framework of A Multi-Agent System with Hybrid Approach". Journal of Chinese Engineering, 2006.

- Chih-Fu Chang, Jane Hsu and Li-Chen Fu. "Dynamic Game Based Hybrid Multi-Agent Control". International Journal of Electric Business Management, 2006.

Conference

- Shao-Hung Chan, Siquan Zeng, Chee-An Yu, Xiaoyue Xu, Ming-Li Chiang, Li-Chen Fu, "Task-Oriented Navigation System for Dynamical Multiple Social Tasks of a Service Robot," International Conference on Control, Automation and Systems (ICCAS), 2021.

- Shih-Hsi Hsu, Shao-Hung Chan, Ping-Tsang Wu, Li-Chen Fu, "Distributed Deep Reinforcement Learning based Indoor Visual Navigation," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018.

- Pei-Huai Ciou, Yu-Ting Hsiao, Zong-Ze Wu, Shih-Huan Tseng, Li-Chen Fu, "Composite Reinforcement Learning for Social Robot Navigation," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018.

- Shao-Hung Chan, Ping-Tsang Wu, Li-Chen Fu, "Robust 2D Indoor Localization through Laser SLAM and Visual SLAM Fusion," IEEE International Conference on Systems, Man, and Cybernetics (SMC), 2018.

- Shih-An Yang, Edwinn Gamborino, Chun-Tang Yang, and Li-Chen Fu, “A Study on the Social Acceptance of a Robot in a Multi-Human Interaction Using an F-formation Based Motion Model,” IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2016.

- Jiangyuan Zhang, Vicente Queiroz, Zong-Ze Wu, Pei-Hwai Ciou, Shih-Hsi Hsu, Shih-Huan Tseng, and Li-Chen Fu, “Visual Servoing with Time-delay Compensation for Humanoid Mobile Manipulator,” IEEE International Conference on Systems, Man, and Cybernetics (SMC), 2016.

- Yu-Chi Lin, Shao-Ting Wei, Shih-An Yang, Li-Chen Fu, "Planning on searching occluded target object with a mobile robot manipulator," IEEE International Conference on Robotics and Automation (ICRA), pp. 3110-3115, 26-30 May 2015.

- Pei-Wen Wu, Yu-Chi Lin, Chia-Ming Wang, Li-Chen Fu, "Grasping the object with collision avoidance of wheeled mobile manipulator in dynamic environments," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 5300-5305, 3-7 Nov. 2013.

- Chia-Ming Wang, Shin-Huan Tseng, Pei-Wen Wu, Yuan-Han Xu, Chien-Ke Liao, Yu-Chi Lin, Yi-Shiu Chiang, Chung-Dial Lim, Ting-Sheng Chu, Li-Chen Fu, "Human-oriented recognition for intelligent interactive office robot," 13th International Conference on Control, Automation and Systems (ICCAS), pp. 960-965, 20-23 Oct. 2013.

- Ming-Fang Chang, Wei-Hao Mou, Chien-Ke Liao, Li-Chen Fu, "Design and implementation of an active robotic walker for Parkinson's patients," Proceedings of SICE Annual Conference (SICE), pp. 2068-2073, Aug. 20-23, 2012.

- Kuan-Ting Yu, Shih-Huan Tseng, and Li-Chen Fu, "Learning Hierarchical Representation with Sparsity for RGB-D Object Recognition," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2012.

- Wei-Hao Mou, Ming-Fang Chang, Chien-Ke Liao, Yuan-Han Hsu, Shih-Huan Tseng, and Li-Chen Fu, "Context-Aware Assisted Interactive Robotic Walker for Parkinson’s Disease Patients," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2012.

- Kai Siang Ong, Yuan-Han Hsu, and Li-Chen Fu, "Sensor Fusion Based Human Detection and Tracking System for Human-Robot Interaction," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2012.

- Ju-Hsuan Hua, Shih-Huan Tseng, Shao-Po Ma, Jie Fu, Li-Chen Fu, "Decision-Networks based Attentive HRI by Reasoning Human Intention and Preferences," The 43rd International Symposium on Robotics (ISR 2012), Taipei, Taiwan, Aug. 29-31, 2012.

- Kuo-Chen Huang, Shih-Huan Tseng, Wei-Hao Mou, and Li-Chen Fu, "Simultaneous localization and scene reconstruction with monocular camera," IEEE International Conference on Robotics and Automation (ICRA), pp. 2102-2107, May 14-18, 2012.

- Shih-Huan Tseng et al., "Intelligent interactive robot in an office environment," Proceedings of the 2011 International Conference on Service and Interactive Robots.

- Chen Tun Chou, Jiun-Yi Li, Ming-Fang Chang, and Li-Chen Fu, "Multi-robot Cooperation Based Human Tracking System Using Laser Range Finder," IEEE International Conference on Robotics and Automation (ICRA), 2011.

- Kuan-Ting Yu, Chi-Pang Lam, Ming-Fang Chang, Wei-Hao Mou, Shih-Huan Tseng, and Li-Chen Fu, "An Interactive Robotic Walker for Assisting Elderly Mobility in Senior Care Unit," IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO), 2010.

- Kuo-Chen Huang, Jiun-Yi Li, and Li-Chen Fu, "Human-Oriented Navigation for Service Providing in Home Environment," Proceedings of SICE Annual Conference (SICE), 2010.

- Chih-Fu Chang and Li-Chen Fu, "Dynamic state feedback control of robotic formation system," IEEE IROS 2010, pp. 3574-3579.

- Wei-Jen Kuo, Shih-Huan Tseng, Jia-Yuan Yu, and Li-Chen Fu, "A hybrid approach to RBPF based SLAM with grid mapping enhanced by line matching," IEEE/RSJ International Conference on Intelligent Robots and Systems, 2009 (IROS 2009).

- Guan-Hao Li, Chih-Fu Chang and Li-Chen Fu, "bSLAM navigation of a Wheeled Mobile Robot in presence of uncertainty in indoor environment," IEEE ARSO 2009, pp. 6-11.

- Guan-Hao Li, Chih-Fu Chang and Li-Chen Fu, "Navigation of a wheeled mobile robot in indoor environment by potential field based-fuzzy logic method," IEEE ARSO 2008, pp. 1-6.

- Chih-Fu Chang and Li-Chen Fu, "A formation control framework based on Lyapunov approach," IEEE IROS 2008, pp. 2777-2782.

- Chih-Fu Chang and Li-Chen Fu, "A Study of Nonholonomic Formation Dynamics: From A Perspective of Interactive Point," IFAC 2008.

- Chi-Pang Lam, Wei-Jen Kuo, Chun-Feng Liao, Ya-Wen Jong, Li-Chen Fu, Joyce Yen Fen, "An Efficient Hierarchical Localization for Indoor Mobile Robot with Wireless Sensor and Pre-Constructed Map," The 5th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI 2008).

- M.C. Shiu, F.L. Lian, L.C. Fu, H.T. Lee, "Magnetic Force Analysis for the Actuation Design of 2D Rotational Modular Robots," in Proc. 33rd Annual Conference of the IEEE Industrial Electronics Society (IECON), Taipei, pp. 2236-2241, 2007.

- Chih-Fu Chang and Li-Chen Fu, "A Control Framework of a Multiagent System with Hybrid System Approach," IEEE ICRA 2006.

- Chih-Fu Chang and Li-Chen Fu, "Modeling and Stability Analysis of a Nonholonomic Multi-Agent Formation Problem," IEEE SMC 2006.

- Chih-Fu Chang and Li-Chen Fu, "Analysis of a Behavior Based Nonholonomic Wheeled Mobile Robot Control with Hybrid System Approach," invited session, SICE-ICCAS 2006.

- Chih-Fu Chang, Chun-Po Huang, Li-Chen Fu, "Design an Adaptive Control Using Supervisory Approach for Mobile Manipulator," International Conference on Automation Technology, 2005.

- Chih-Fu Chang, Chun-Po Huang, Li-Chen Fu, Yu-Ming Yi, Yu-Po Hsu, Dai-Jei Tzou, Jerry Chen, Joseph Lai and I-Fan Lin, "A New Hybrid System Approach to Design of Controller Architecture of a Wheeled Mobile Manipulator," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Aug. 2005.

- Yung-Hao Chen, Chih-Fu Chang, "An Implementation of a Real-Time Vision Algorithm Using FPGA," Automation 2005.

- Chih-Fu Chang, C.-I Huang, Li-Chen Fu, "Nonlinear control of a Wheeled Mobile Robot with Nonholonomic Constraints," IEEE International Conference on Systems, Man and Cybernetics (SMC), vol. 6, pp. 5404-5410, Oct. 10-13, 2004.

- Cheng-Ming Huang, Su-Chiun Wang, Chih-Fu Chang, Chin-I Huang, Yu-Shan Cheng, Li-Chen Fu, "An Air Combat Simulator in the Virtual Reality with the Visual Tracking System and Force-Feedback Components," IEEE CCA 2004.

Master's Theses

- 涂志宏, "基於視覺語言模型及認知地圖之機器人室內環境問答系統 A Robot System for Indoor Environment Question Answering with Cognitive Map Leveraging Vision-language Models," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2023.

- 游祖霖, "在比例未知的樓層平面圖上進行基於空間圖形方法的定位與導航 Spatial Graph-based Localization and Navigation on Scaleless Floorplan," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2023.

- 陳建婷, "基於人類行為及認知記憶之物件目標導航居家照護型機器人 Object-Goal Navigation of Home Care Robot based on Human Activity Inference and Cognitive Memory," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2023.

- 湯嘉懿, "基於視覺之步態分析的跌倒預測機器人 Vision-based Gait Analysis Robot for Fall Prediction," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2023.

- 曾思銓, "應用於人機互動的三維激光雷達多目標分割追蹤系統 3D LiDAR Multi-Target Segmentation and Tracking System for Human-Robot Interaction," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2022.

- 陳顥云, "基於混合式感測及周遭行人軌跡預測的移動型機器人在人群中之社交導航 Social Crowd Navigation of a Mobile Robot based on Human Trajectory Prediction and Hybrid Sensing," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2021.

- 王俊傑, "應用於居家服務機器人之人物互動圖卷積神經網路人類行為辨識系統 Human Activity Recognition System for Home Service Robot Utilizing Adaptive Graph Convolutional Network and Human Object Interaction," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2021.

- 徐瀟越, "基於光達與相機融合之三維語意地圖之靈巧運動機器人 Agile Movement Mobile Robot under 3D Semantic Map Built by LiDAR and Camera Fusion," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2021.

- 余奇安, "融合天花板與地面互補式圖資訊於複雜環境之定位導航系統 Complex Environment Localization System Using Complementary Ceiling and Ground Map Information," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2020.

- 張天時, "擬人之行走:依第一人稱視角進行社交導航方向之全向輪機器人 Walk Like a Human: Social Navigation of Omnidirectional Robot based on First Person View," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2019.

- 朱啟維, "視覺對話:基於影像的中文回覆生成 Visual Chat: Image Grounded Chinese Response Generation," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2019.

- 蕭羽庭, "自傳式記憶輔助型對話系統 Dialogue System Assisted by Autobiographical Memory," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2019.

- 詹少宏, "使移動機器人執行動態多社交任務之最佳化導航系統 Optimized Navigation System for a Mobile Robot Performing Dynamic Multiple Social Tasks," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2019.

- Vicente Queiroz, "基於使用者活動識別之日常生活推論的情境互動提醒機器人 Contextual Reminder Robot by Inferring User’s Daily Routine based on Human Activity Recognition," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2018.

- 吳秉蒼, "以多層環境可供性地圖達成強健室內定位、人類活動事件偵測以及社交友善導航 Robust Indoor Localization, Human Event Detection and Socially Friendly Navigation Based on Multi-Layer Environmental Affordance Map," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2018.

- 楊峻棠, "使用延伸社交力模型之社交感知全向性移動機器人導航應用於多人室內環境 A Socially-Aware Navigation of Omnidirectional Mobile Robot with Extended Social Force Model in Multi-Human Indoor Environment," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2018.

- 邱沛淮, "以合成強化式學習之適應行為學習之社交機器人導航 Adaptive Behavior Learning Social Robot Navigation with Composite Reinforcement Learning," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2017.

- 愛德溫, "以混合互動強化學習方式輔助機器人行動計劃用於孩童情感支持 Hybrid Interactive Reinforcement Learning based Assistive Robot Action-Planner for the Emotional Support of Children," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2017.

- 徐世曦, "基於圖像嵌入與深度強化學習之無地圖室內視覺導航 Map-less indoor visual navigation based on image embedding and deep reinforcement learning," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2017.

- 錢曉蓓, "具有多模式介面的輔助行走機器人之共同控制策略 Learning-based Shared Control for a Smart Walker with Multimodal Interface," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2017.

- 楊時安, "基於全向移動性之社交感知機器人導航系統 Socially-Aware Robot Navigation System based on Omnidirectional Mobility," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2016.

- 張江元, "具時間延遲補償之視覺伺服於人形移動式機械臂 Visual Servoing with Time-delay Compensation for Humanoid Mobile Manipulator," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2016.

- 林友騏, "基於搜尋演算法之規劃移動式機器手臂尋找與夾取遮蔽目標物 Search Algorithm based Planning on Finding and Grasping of Occluded Target Object with a Mobile Robot Manipulator," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2014.

- 吳佩文, "利用彩色深度相機建立移動型機械手臂於動態環境中抓取物體系統 Manipulator Grasping on a Mobile Platform with Help from RGB-D Cameras in Dynamic Environments," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2013.

- 張明芳, "應用於辦公室機器人服務之基於異質特徵的語意地圖建置 Building Semantic Map in Office Environment using Heterogeneous Feature-based Registration for Robotic Service," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2012.

- 王祈翔, "應用多感測器之融合於人機互動之人員偵測與追蹤系統 Sensor Fusion Based Human Detection and Tracking System for Human-Robot Interaction," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2012.

- 李俊毅, "於多人環境中基於情境感知的智慧型機器人導航 Context-Aware Navigation of Intelligent Mobile Robot in Multi-human Environment," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2011.

- 周楨惇, "應用雷射測距儀於多機器人合作之人員追蹤系統 Multi-robot Cooperation Based Human Tracking System Using Laser Scanner," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2010.

- 余嘉淵, "具有彈性的特徵點選擇策略應用於強化機器人之同時定位與建立地圖系統 A Flexible Feature Selection Strategy for Improving Bearing-only SLAM," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2010.

- 李貫豪, "運用bSLAM修正輪型機器人室內導航之不確定性誤差 bSLAM Navigation of a Wheeled Mobile Robot in Presence of Uncertainty in Indoor Environment," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2009.

- 彭禹安, "大環境之機器人視覺特徵擷取環境定位 Vision-based Global Localization of Large-Scale Indoor Environments with Hierarchical Map," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2009.

- 吳俊逸, "利用整合式單眼視覺之機器人同步自我定位及建立地圖系統實現大範圍之室內環境探索 An Integrated Robotic vSLAM System to Realize Exploration in Large Indoor Environment," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2007.

- 陳任志, "Ellipsoid aMAPRM中間軸偵測與Voronoi邊界取樣之機器人路徑規劃應用 Path Planning: Hybrid Ellipsoid aMAPRM Using Adaptive Voronoi-cuts," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2006.

- 游名沂, "Bandwidth Control Based on Wireless LAN for Applications in Multi-robot Cooperative Systems," Master's Thesis, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2005.

Dissertation

- 許銘全, "八邊形模組式機器人之設計、控制及重組規劃 Design, Control, and Reconfiguration Planning for Octagonal Modular Robot," Ph.D. Dissertation, Institute of Electrical Engineering, National Taiwan University, R.O.C., 2010.